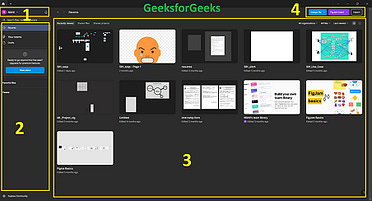

If your validation can’t disprove the idea, it’s not validation.

Why validation often protects the wrong beliefs and how founders pay for it later

When Doing Everything Right Still Goes Wrong

For a long time, BlackBerry was doing exactly what serious companies are told to do. They listened to their best customers. Enterprise clients renewed contracts. Governments trusted their security. Power users typed thousands of emails a week on physical keyboards that were objectively faster than touchscreens. Internally, decisions were grounded in metrics that had worked for years: reliability, encryption, battery life, and administrative control. When touchscreen phones started gaining attention, BlackBerry didn’t ignore them. They evaluated them. And by BlackBerry’s standards, they were worse. Slower to type on. Less secure. Less suited for people who live in email all day. There was nothing reckless about staying the course.

Around the same period, Boeing found itself under a different kind of pressure. Airlines wanted better fuel efficiency. Competitors were moving fast. Timelines mattered. Boeing introduced a new automated control system into an existing aircraft design and ran it through established certification processes. Simulators were used. Failure modes were reviewed. Pilot training requirements were evaluated. Each step produced the same outcome: trained pilots could recognize the system’s behavior and respond correctly. The documentation was complete. The reviews were formal. The approvals were granted. Again, nothing about this looked careless.

In both cases, the people involved believed they were doing the right thing. They weren’t guessing. They weren’t ignoring warning signs. They were following the process, relying on data, and validating decisions the way competent organizations are supposed to. At the time, there was no obvious reason to think something important was being missed. That only became clear later.
What is happening at the core human level when this occurs

When cases like BlackBerry or Boeing are discussed after the fact, they are often framed as failures of judgment or vision. That framing is comforting because it suggests the problem was a lack of intelligence or courage. But that is rarely what is actually happening. At the human level, this pattern has very little to do with competence. It is driven by a much deeper instinct about how we decide what is true. Humans have a strong tendency to confuse coherence with correctness. When a belief fits the data we already have, aligns with past success, survives structured review, and feels internally consistent, it acquires a sense of solidity. It stops feeling provisional. It starts feeling real. Not in a philosophical sense, but in a practical one. It feels safe enough to act on.

At that point, validation quietly changes its role. Instead of being a way to surface uncertainty, it becomes a way to manage discomfort. It reduces anxiety by showing that the decision is defensible. It justifies commitment by demonstrating that due process was followed. It legitimizes action by making it feel earned rather than impulsive. None of this is usually conscious. People do not sit in rooms thinking about protecting beliefs. They think they are being careful. Responsible. Methodical. But the outcome is that validation systems are shaped less by what could disprove a belief and more by what makes that belief feel stable enough to proceed. They are designed to feel rigorous and look comprehensive while quietly preserving the assumption they are meant to test. That is why these failures are so hard to see from the inside. The process is doing exactly what it is psychologically rewarded to do.

The science underneath

From a cognitive and neurological perspective, this behavior is not surprising. The human brain is optimized for action under uncertainty, not for eliminating uncertainty.
The prefrontal cortex in particular plays a central role in integrating incomplete information into coherent models that can guide decisions. This makes humans exceptionally good at pattern completion, narrative construction, and explaining outcomes after the fact.

Once a belief has formed, the brain tends to treat it as a working model rather than a hypothesis. New information is filtered through it. Evidence that fits is easier to encode and recall. Evidence that conflicts is more effortful to process and easier to dismiss as noise or an exception. This is not a flaw in the moral sense. It is an efficiency mechanism: maintaining multiple competing interpretations of reality is cognitively expensive. Actively trying to falsify beliefs threatens more than correctness. It threatens identity, competence, and status. It forces people to confront the possibility that their prior reasoning, expertise, or judgment may not generalize as well as they thought. As a result, unless a system is explicitly designed to force disconfirmation, the brain will naturally drift toward belief protection. It will seek closure, coherence, and justification long before it seeks falsification.

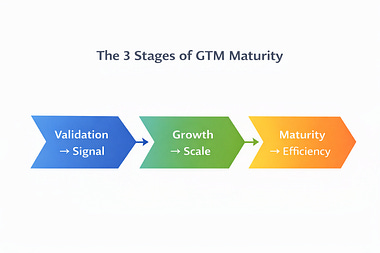

This is why simply telling people to “be more objective” or “challenge assumptions” rarely works. The default cognitive machinery is working against that goal. Without structural pressure, validation will almost always converge toward reassurance rather than exposure.

Why this matters for founders

Founders operate in environments where uncertainty is not a phase but a constant. Feedback arrives late, often distorted by politeness or noise. The consequences of being wrong are asymmetric. Small mistakes compound quietly, while correct decisions rarely announce themselves until much later. This is especially relevant in the first GTM stage: the validation phase.

In that setting, validation feels like a form of discipline. It is a way to slow down impulse, to avoid self-deception, to show that a decision is grounded rather than imagined. Most founders are not trying to be reckless. They validate precisely because they are trying to be careful.

The problem is not the intent. It is what validation gradually turns into. Instead of being a way to surface what might be wrong, validation often becomes a way to earn the right to move forward. It functions as a permission structure. A signal that enough boxes have been checked. A mechanism for generating confidence that feels earned rather than assumed.

At that point, validation stops being about truth and starts being about justification. It reassures teams. It calms investors. It allows founders to commit without feeling irresponsible. And because it feels responsible, it is rarely questioned.

This is what makes founders especially vulnerable. In an environment where action is required before certainty is possible, validation quietly shifts from a tool for exposing fragility into a moral license to proceed. The more uncertain the terrain, the more tempting that shift becomes.

When founders got it wrong at the validation phase

In 2011, Bill Nguyen had reason to be confident.
He had raised tens of millions of dollars for Color, backed by some of the most respected investors in Silicon Valley. The technology worked. Photos appeared instantly on nearby phones, grouped automatically, without anyone having to add friends or curate feeds. When Nguyen showed the product, people nodded. They got it. Engineers admired the architecture. Investors praised the ambition.

In the weeks before launch, validation looked positive. People said photo sharing was important. They said the experience felt magical. They said the idea was interesting. What no one asked was what it would take for someone to actually use it on a Tuesday afternoon.

Using Color meant opening a new app instead of the ones people already checked habitually. It meant trusting an unfamiliar social context. It meant changing how moments were captured and shared, without any immediate pull from existing relationships. None of that surfaced during validation, because validation never forced users to make a tradeoff. It asked whether the idea made sense, not whether it would displace behavior that was already working.

When Color launched, people did not reject it. They simply didn’t reorganize their habits around it. The idea survived the explanation. It did not survive indifference. Validation had confirmed that the concept was reasonable. It had never tested whether behavior would actually change when there was friction, choice, and no one watching.

A decade earlier, a similar pattern played out with Webvan, founded by Louis Borders. On paper, Webvan looked inevitable. Customers wanted groceries delivered. Surveys said so. Market research supported it. Early pilots showed interest. The logic was clean: groceries were a frequent, universal need, and delivery would save time. Borders validated aggressively and expanded fast, building infrastructure ahead of demand to meet what seemed like an obvious future.

But grocery shopping is not just a transaction.
It is a habit, embedded in routines, price sensitivity, and trust built over the years. Using Webvan required customers to plan differently, pay differently, and give up control over selection and timing. Those costs were small individually but cumulative in practice. Validation never forced those costs into view. It confirmed that delivery sounded appealing. It did not test whether people would consistently alter their weekly behavior enough to support the economics required to make the model work. When reality arrived, interest was not the problem. Inertia was.

In both stories, the founders did what they believed responsible founders should do. They validated demand. They gathered feedback. They built coherent narratives around why adoption would follow. What they validated was reasonableness.

Startups do not fail because ideas are unreasonable. They fail because behavior does not move. Habits dominate attention. Existing workflows resist replacement. Constraints assert themselves quietly and persistently. Validation that does not force people to pay a real cost in time, effort, money, or discomfort cannot reveal whether a product will actually live in the world. It can only show that the idea sounds good while nothing is at stake. That is not product validation. It is plausibility testing.

What happens when founders do it right

When Dylan Field started working on what would become Figma, the idea itself was easy to dismiss. Serious design tools lived on desktops. They were heavy, powerful, and local. Browsers were slow, unreliable, and not where professionals did real work. Field’s belief cut directly against that norm. He thought designers would accept a browser-based tool if collaboration became immediate and friction disappeared. It was a fragile belief. Years of habit stood against it.

So he did not ask designers what they thought. He built a minimal browser-based editor and invited a small group to use it for actual projects. No promises.
No migration paths. No safety net. If designers treated it as a curiosity and returned to their desktop tools, the belief would end there.

Some did. But enough designers stayed. They shared links instead of files. They edited together in real time. They did work that mattered inside a tool that was not supposed to support it. They did not argue that it could work. They simply worked. The belief survived because behavior changed, not because the idea sounded convincing.

Years earlier, something similar happened with Shopify, though in a very different domain. Tobi Lütke did not start Shopify to build a platform. He started it to run his own online store. The belief forming quietly behind the tool was specific and testable: non-technical merchants would set up and operate online stores themselves if the software removed enough friction. That belief could not be tested by explanation alone.

So Lütke released the tool and watched what happened. Did merchants configure stores on their own? Did they list products? Did they accept payments? Did they launch without help? If every store required hand-holding, the belief would collapse quickly. Many stores needed none. Merchants signed up, configured storefronts, and started selling without talking to anyone. Not because they were persuaded, but because the cost of trying was low and the payoff was immediate. The software either fit into their behavior or it didn’t. In enough cases, it did.

What these stories share is not luck or foresight. It is how validation was framed at the very first point of contact with the market. In both cases, the founders created situations where being wrong would be cheap and obvious. Beliefs were exposed to real behavior early, before confidence hardened into identity. Validation did not provide reassurance. It replaced reassurance with information. When a belief survives an honest attempt to disprove it, it earns strength. This is what real validation looks like at GTM stage one.
Where Validation Breaks Down

What makes this kind of validation hard is not ignorance but pressure. Early on, belief holds everything together. It aligns teams, reassures investors, and creates momentum before there is much evidence to lean on. Tests that can fail threaten that belief at the exact moment it feels most necessary.

There is also an emotional cost. Early disproof feels less like learning and more like personal failure. Founders are deeply identified with their ideas, and environments around them reward confidence, not uncertainty. As a result, validation quietly shifts from exposing fragility to protecting morale and legitimacy.

That is why this is not a methodological problem but a philosophical one. Founders who do this well stop asking whether an idea sounds right and start asking what would make it wrong. They design tests that can falsify beliefs and treat belief death as progress. The goal is not reassurance. It is removing wrongness before reality does it for you.

- Before you build anything, make sure someone wants it enough to pay. I put together a free 7-day email course on revenue-first customer discovery: how to pull real buying intent from real conversations (without guessing, overbuilding, or hoping). If you’re a builder who wants clarity before code:

Monday, January 26, 2026
